TransDS: Adaptive Data Framework for Modern Systems

TransDS (Transitional Data Systems) is a modern data management framework that treats data as a dynamic, adaptable asset rather than a static, isolated resource. It enables seamless data movement across systems in real time, supports multi-format adaptability, and maintains context and integrity throughout the data lifecycle. Designed for interoperability, security, and scalability, TransDS helps organizations unify, transform, and leverage data more intelligently.

In an era where real-time insights and cross-platform functionality are essential, TransDS offers a forward-thinking approach to data architecture. By breaking down traditional silos and promoting data fluidity, it empowers businesses to make smarter, faster, and more flexible decisions.

Understanding the Philosophy Behind TransDS

TransDS rethinks conventional data management, which treats data as objects that merely exist rather than as living assets. It shifts the emphasis to mobility, flexibility, and context, so that data is ready to meet your needs whenever and wherever they arise.

This approach aligns with the requirements of a contemporary digital ecosystem in which agility and integration are crucial. TransDS supports non-trivial data transfer between systems so that decisions can be made more intelligently, flexibly, and efficiently at all levels.

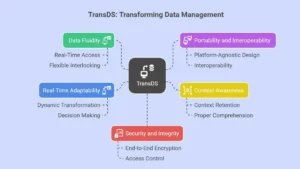

How TransDS Transforms Data Management

1. Data Fluidity

Data fluidity in TransDS means that data can move between systems, formats, and platforms without friction. It provides flexible, real-time access, so data can go wherever it is required while keeping its form and structure, without being damaged or losing its relevance to the business.

2. Portability and Interoperability

TransDS makes it easy to transfer data between a variety of systems and structures. Thanks to its platform-agnostic design, it supports interoperability: data can be used in different environments and retains its meaning without additional transformation.
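To make the idea of portability concrete, here is a minimal sketch of moving the same records between two serializations without losing any values. It uses only the Python standard library; the function names `json_to_csv` and `csv_to_records` and the sample records are hypothetical illustrations, not part of any published TransDS API.

```python
import csv
import io
import json


def json_to_csv(json_text: str) -> str:
    """Convert a JSON array of flat objects into CSV text."""
    records = json.loads(json_text)
    # Collect field names in first-seen order so no column is dropped.
    fieldnames = []
    for record in records:
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def csv_to_records(csv_text: str) -> list[dict[str, str]]:
    """Parse CSV text back into dictionaries (CSV values are always strings)."""
    return list(csv.DictReader(io.StringIO(csv_text)))


# Hypothetical sample data, kept as strings so the round trip is exact.
source = json.dumps([
    {"id": "1", "status": "active"},
    {"id": "2", "status": "archived"},
])
csv_text = json_to_csv(source)
round_tripped = csv_to_records(csv_text)
```

Note that CSV carries no type information, so numbers or booleans would come back as strings. A portable design would need to carry type or schema information alongside the payload.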

3. Context-Awareness

The framework preserves the context of data, including its meaning and intended use, so that it is understood correctly and acted on appropriately across platforms, formats, and systems.
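One way to picture context preservation is an envelope that keeps descriptive metadata attached to a payload while the payload itself changes format. The sketch below is an assumption about how this could look, not a description of TransDS internals; the `Envelope` class, the `to_json_lines` function, and the context keys are all hypothetical.

```python
import json
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class Envelope:
    """A payload plus the context needed to interpret it (hypothetical type)."""
    payload: str
    media_type: str
    context: dict = field(default_factory=dict)


def to_json_lines(envelope: Envelope) -> Envelope:
    """Re-encode a JSON array as newline-delimited JSON, keeping the context."""
    records = json.loads(envelope.payload)
    lines = "\n".join(json.dumps(record, sort_keys=True) for record in records)
    # Only the payload and media type change; the context travels unchanged.
    return replace(envelope, payload=lines, media_type="application/x-ndjson")


original = Envelope(
    payload=json.dumps([{"systolic": 120}, {"systolic": 135}]),
    media_type="application/json",
    context={"source": "clinic-a", "unit": "mmHg", "purpose": "monitoring"},
)
converted = to_json_lines(original)
```

Because the unit and source stay with the data, a downstream consumer of `converted` can still tell what the numbers mean even though the serialization has changed.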

4. Real-Time Adaptability

TransDS allows data to adapt dynamically as time, format, and conditions change. This real-time elasticity supports flexible decision-making, integration, and effective operation in dynamic digital environments.

5. Security and Integrity

In the modern environment, data security is a must. TransDS maintains trust even at high speed and high volume by incorporating end-to-end encryption, access control, and data integrity protocols. Data keeps its original meaning and value, no matter how many systems it passes through.
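As an illustration of an integrity check, the sketch below computes a SHA-256 fingerprint over a canonical JSON encoding of a record and verifies it after transfer. This is a generic technique chosen for illustration, not a documented TransDS protocol; the `fingerprint` and `verify` helpers and the sample record are hypothetical.

```python
import hashlib
import hmac
import json


def fingerprint(record: dict) -> str:
    """Return a SHA-256 hex digest of a canonical JSON encoding of the record."""
    # Sorting keys and fixing separators makes the encoding independent of
    # the key order used by whichever system produced the record.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify(record: dict, expected: str) -> bool:
    """Check that a received record still matches the fingerprint sent with it."""
    return hmac.compare_digest(fingerprint(record), expected)


sent = {"account": "A-100", "amount": 250}
digest = fingerprint(sent)

received_ok = {"amount": 250, "account": "A-100"}  # same data, different key order
received_bad = {"account": "A-100", "amount": 2500}  # value altered in transit
```

Here `verify(received_ok, digest)` succeeds and `verify(received_bad, digest)` fails. A plain hash only detects accidental changes; protecting against deliberate tampering would require a keyed construction such as an HMAC or a digital signature.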

Strategic Advantages

1. Enhanced Decision-Making

TransDS gives organizations timely, context-rich data that supports faster and more accurate decisions. By removing silos and enabling real-time insight, it facilitates strategic, informed, and agile decision-making.

2. Accelerated Innovation

Limited access to data stops being an obstacle once developers and data scientists can use TransDS. The framework's fluidity and interoperability promote experimentation, prototyping, and invention, and so reduce time-to-market for new services and products.

3. Operational Efficiency

TransDS greatly reduces the manual effort and technical overhead of integrating, transforming, and reconciling data. Organizations can unify operations, minimize redundancies, and improve overall data quality and governance.

4. Scalable Analytics and AI Readiness

Investments in analytics and AI require growing volumes of data from many sources, and TransDS offers a scalable, highly efficient backbone for data pipelines. This versatility and real-time data movement are essential for driving AI/ML models, real-time dashboards, and workflows.

TransDS vs. Traditional Data Architectures

| Feature | Traditional Data Architectures | TransDS (Transitional Data Systems) |
| --- | --- | --- |
| Data Movement | Manual, batch-based, and delayed | Real-time, seamless, and automated |
| Format Flexibility | Rigid and format-dependent | Adaptive to multiple formats and schemas |
| Interoperability | Limited cross-platform compatibility | Native cross-platform and cross-environment integration |
| Context Awareness | Minimal, often lost during transfer | Maintains contextual meaning and relevance |
| Scalability | Complex and costly to scale | Easily scalable across systems and environments |
| Security & Integrity | Varies; often requires additional layers | Built-in encryption, access control, and integrity checks |

Use Cases Across Industries

- Healthcare: Seamless integration of patient records, diagnostic data, and wearable device outputs while maintaining compliance with HIPAA regulations.

- Finance: Real-time fraud detection, risk assessment, and customer behavior analysis across multiple banking systems.

- Manufacturing: Predictive maintenance, supply chain optimization, and IoT-driven quality control in smart factories.

- Retail: Personalized recommendations, dynamic inventory tracking, and omnichannel customer engagement.

- Government: Inter-agency data sharing, crisis management, and public service delivery without compromising data security.

Lifecycle Data Security

As data becomes more mobile and interconnected, security and governance become paramount. The framework integrates robust security protocols at every stage of the data lifecycle:

- End-to-end encryption: ensures data integrity and confidentiality in transit and at rest

- Access control and policy management: governs who can access or modify data

- Auditability: maintains detailed logs for compliance and forensic analysis
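The access control and auditability points above can be sketched together: every read or write is checked against a role policy, and every attempt is logged whether or not it is allowed. This is a minimal illustration under assumed names; the `POLICY` table, the `AuditedStore` class, and the roles are hypothetical and do not reflect a documented TransDS interface.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role policy: which actions each role may perform.
POLICY = {
    "analyst": {"read"},
    "steward": {"read", "write"},
}


@dataclass
class AuditedStore:
    """In-memory key-value store with role checks and an audit trail."""
    data: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def _authorize(self, user: str, role: str, action: str, key: str) -> None:
        allowed = action in POLICY.get(role, set())
        # Record every attempt, including denied ones, for later review.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "key": key,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{role} may not {action} {key}")

    def read(self, user: str, role: str, key: str):
        self._authorize(user, role, "read", key)
        return self.data[key]

    def write(self, user: str, role: str, key: str, value) -> None:
        self._authorize(user, role, "write", key)
        self.data[key] = value


store = AuditedStore()
store.write("dana", "steward", "patient:42", {"status": "admitted"})
value = store.read("lee", "analyst", "patient:42")
```

Logging denied attempts as well as successful ones is a deliberate choice here, since failed access attempts are often the entries that matter most in forensic analysis.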

Final Thought

TransDS turns data from a static resource into an intelligent, dynamic one. By helping organizations move data securely, in real time, and in context across platforms, it enables them to be more agile and insightful. In a data-driven world, adopting TransDS belongs in any future-ready, efficient digital transformation strategy.
